Confident Learning: Estimating Uncertainty in Dataset Labels

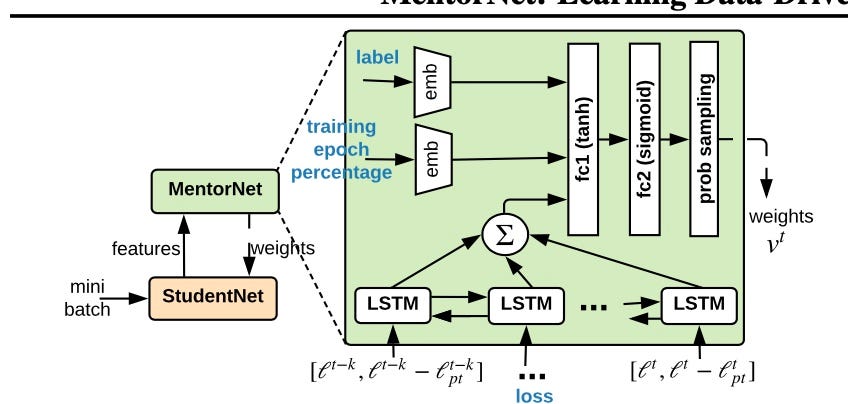

Research - Cleanlab. Confident Learning: Estimating Uncertainty in Dataset Labels. Curtis Northcutt, Lu Jiang, and Isaac Chuang. Journal of Artificial Intelligence Research (JAIR), Vol. 70 (2021). (Code, Blog Post.) Learning with Confident Examples: Rank Pruning for Robust Classification with Noisy Labels. Curtis Northcutt, Tailin Wu, and Isaac Chuang.

Regression Tutorial with the Keras Deep Learning Library in Python (08.06.2016). Keras is a deep learning library that wraps the efficient numerical libraries Theano and TensorFlow. In this post, you will discover how to develop and evaluate neural network models using Keras for a regression problem. After completing this step-by-step tutorial, you will know: how to load a CSV dataset and make it available to Keras; how to create a neural …

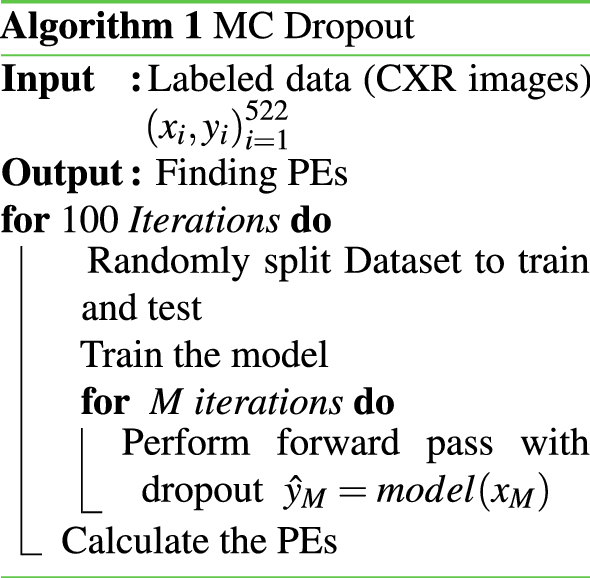

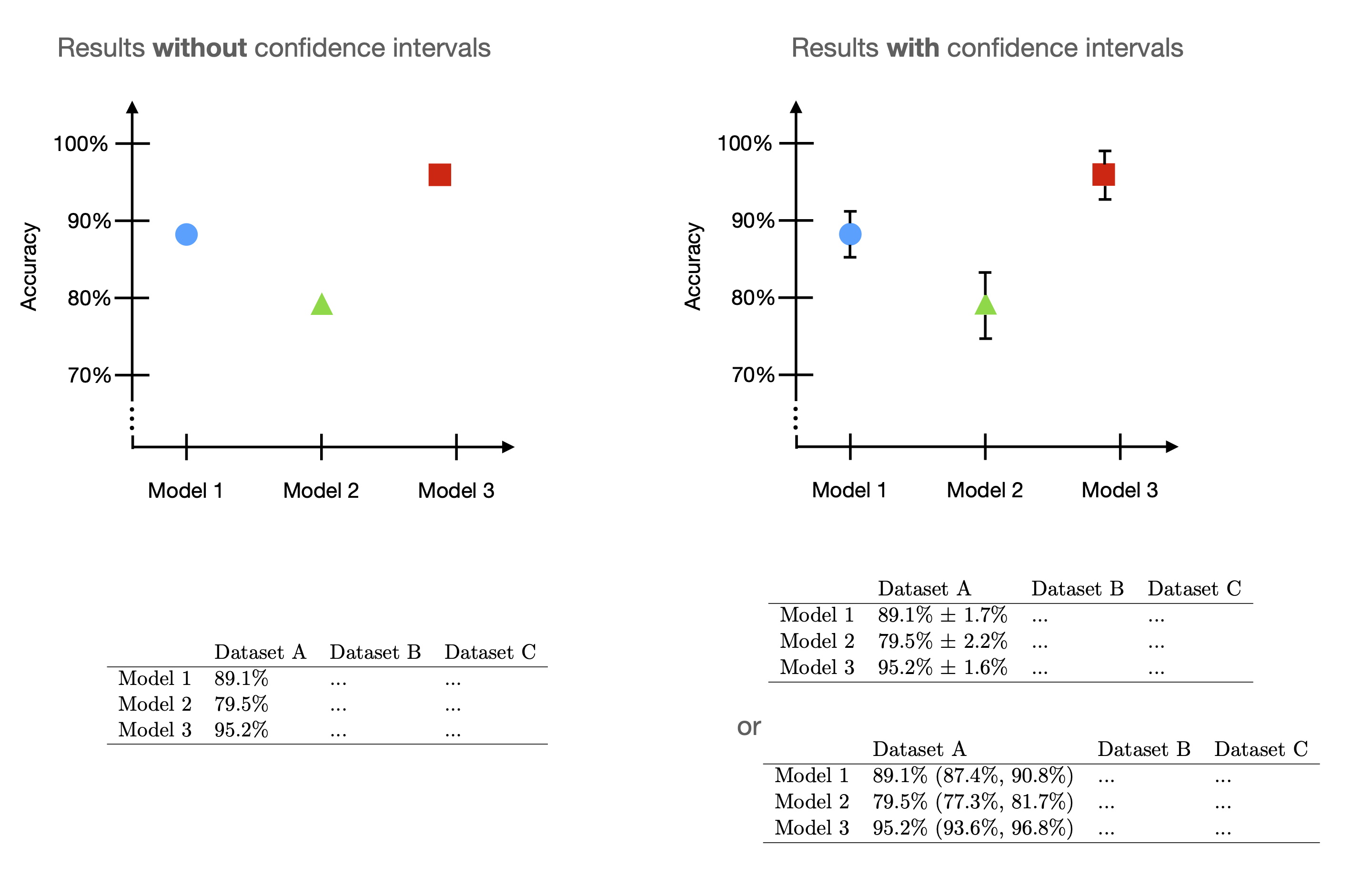

Data Noise and Label Noise in Machine Learning. Aleatoric, epistemic and label noise can detect certain types of data and label noise [11, 12]. Reflecting the certainty of a prediction is an important asset for autonomous systems, particularly in noisy real-world scenarios. Confidence is also frequently used, though it requires well-calibrated models.


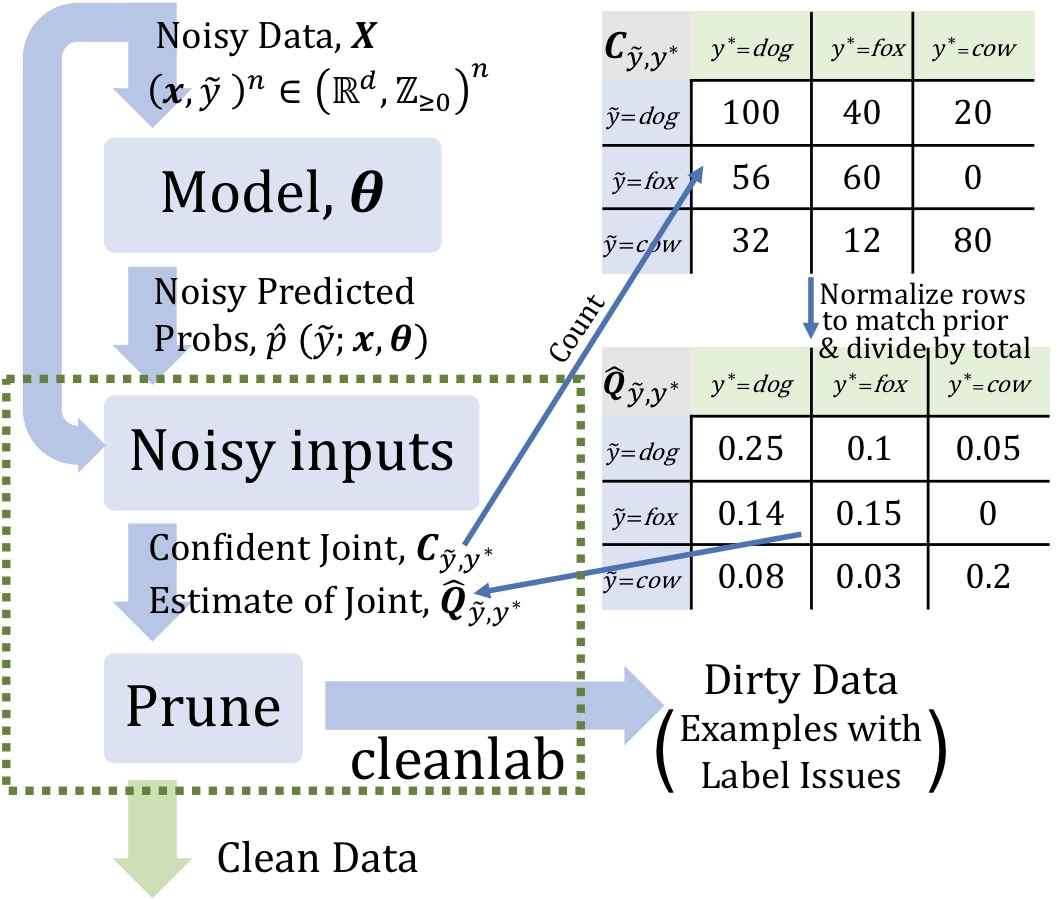

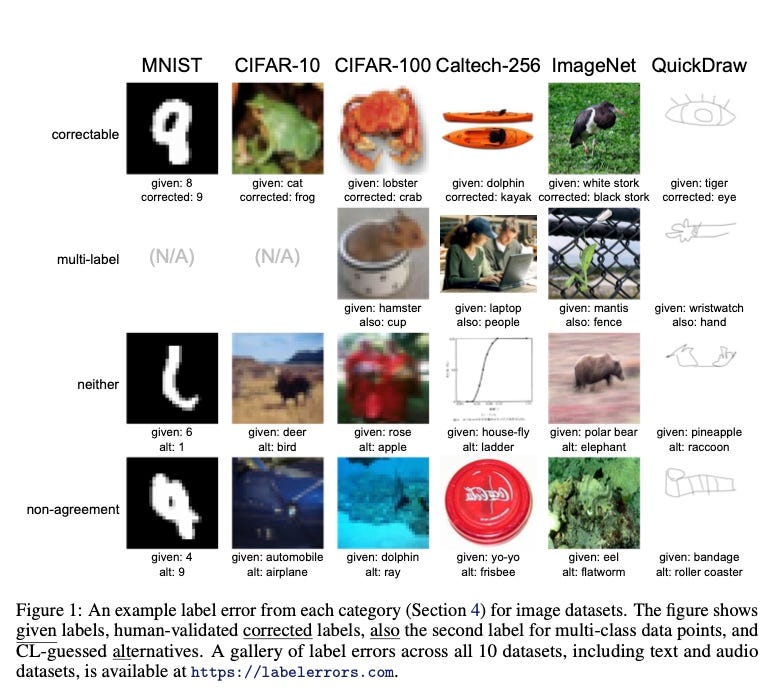

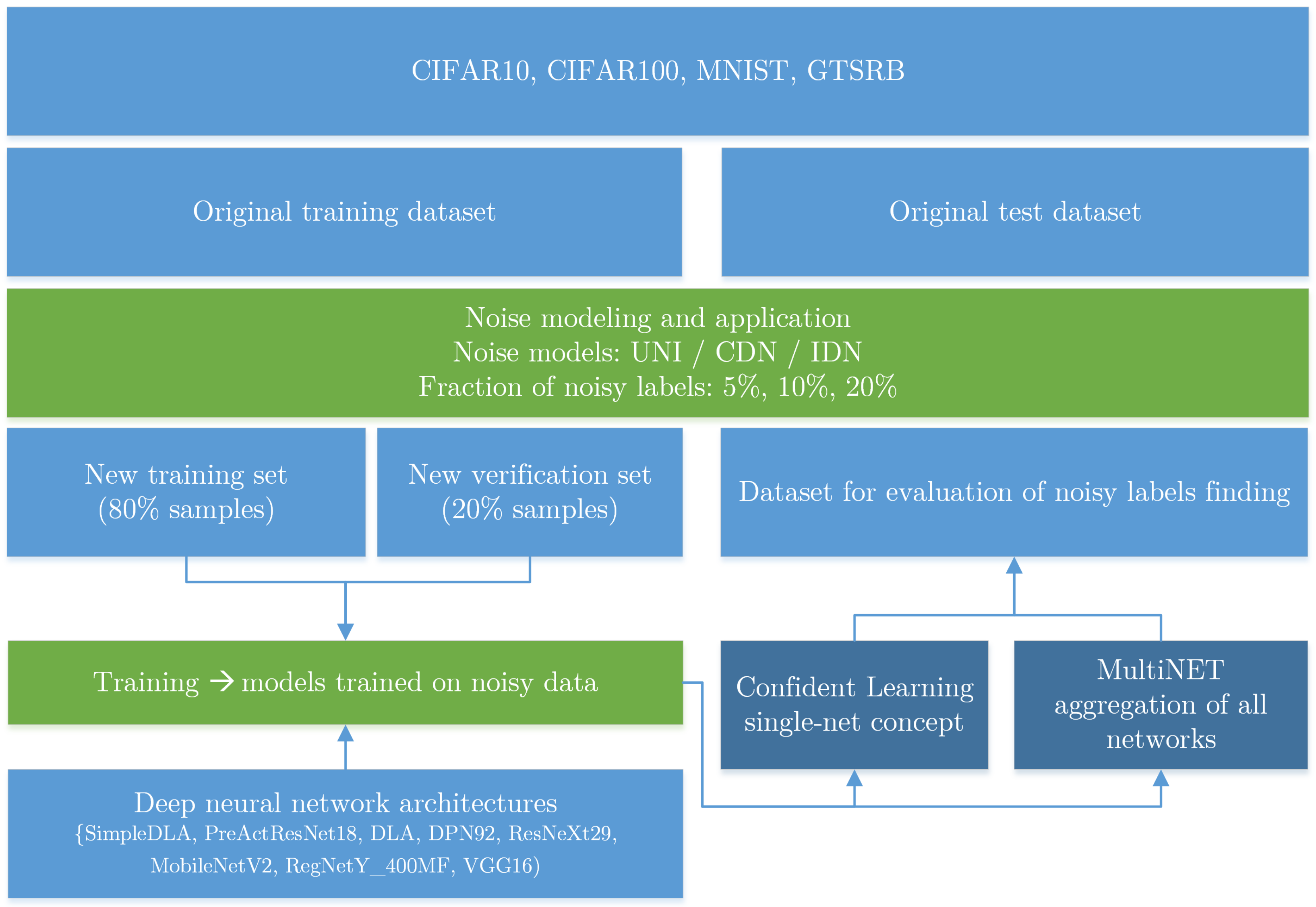

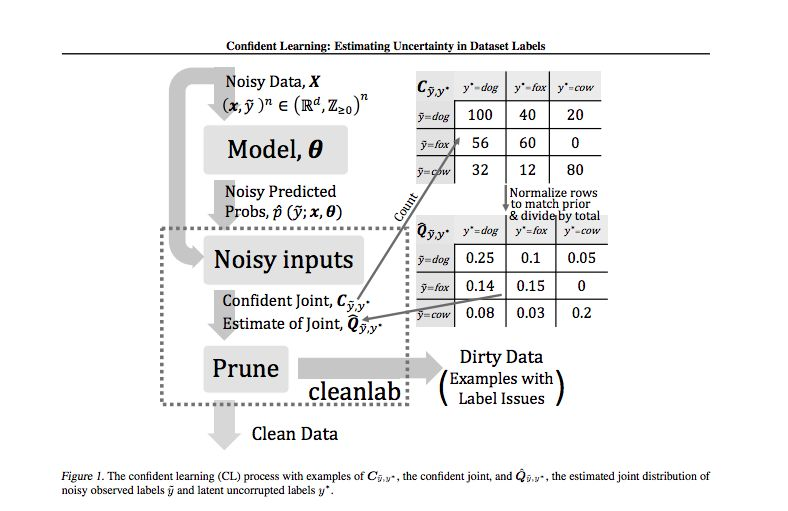

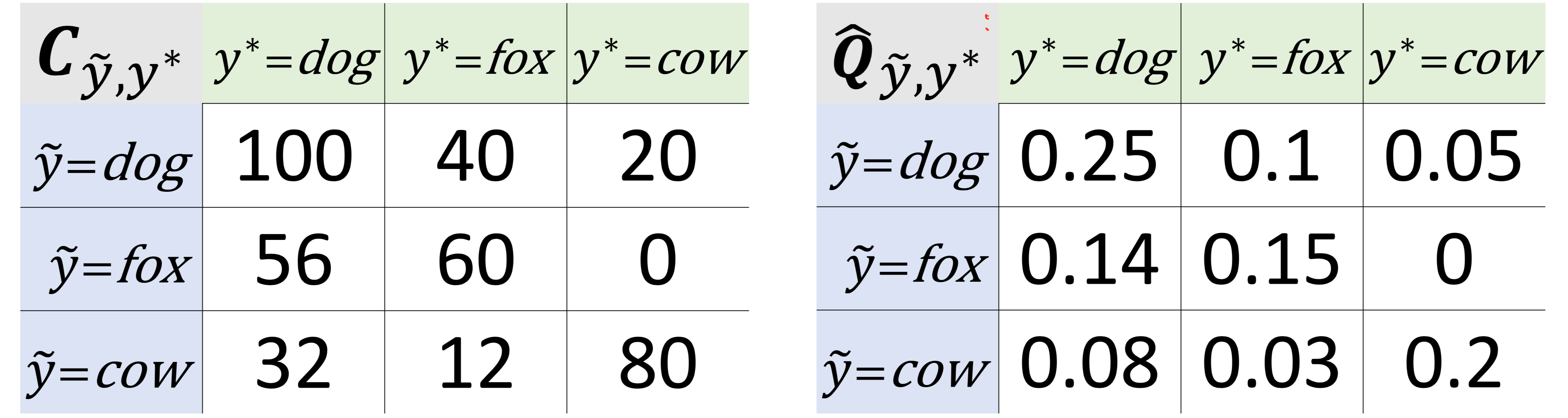

Characterizing Label Errors: Confident Learning for Noisy-Labeled Image ... 2.2 The Confident Learning Module. Based on the assumption of Angluin, CL can identify the label errors in the datasets and improve the training with noisy labels by estimating the joint distribution between the noisy (observed) labels \(\tilde{y}\) and the true (latent) labels \({y^*}\). Remarkably, no hyper-parameters and few extra ...

Find label issues with confident learning for NLP. In this article I introduce you to a method for finding potentially erroneously labeled examples in your training data. It's called Confident Learning. We will see later how it works, but first let's look at the data set we're going to use. `import pandas as pd` `import numpy as np` Load the dataset from Kaggle:

Adam: A Method for Stochastic Optimization - ResearchGate (Dec 22, 2014). This constraint restricts the learning task to solely estimating pre-defined shape descriptors from 3D images and imposes a linear relationship between this shape representation and the output (i ...

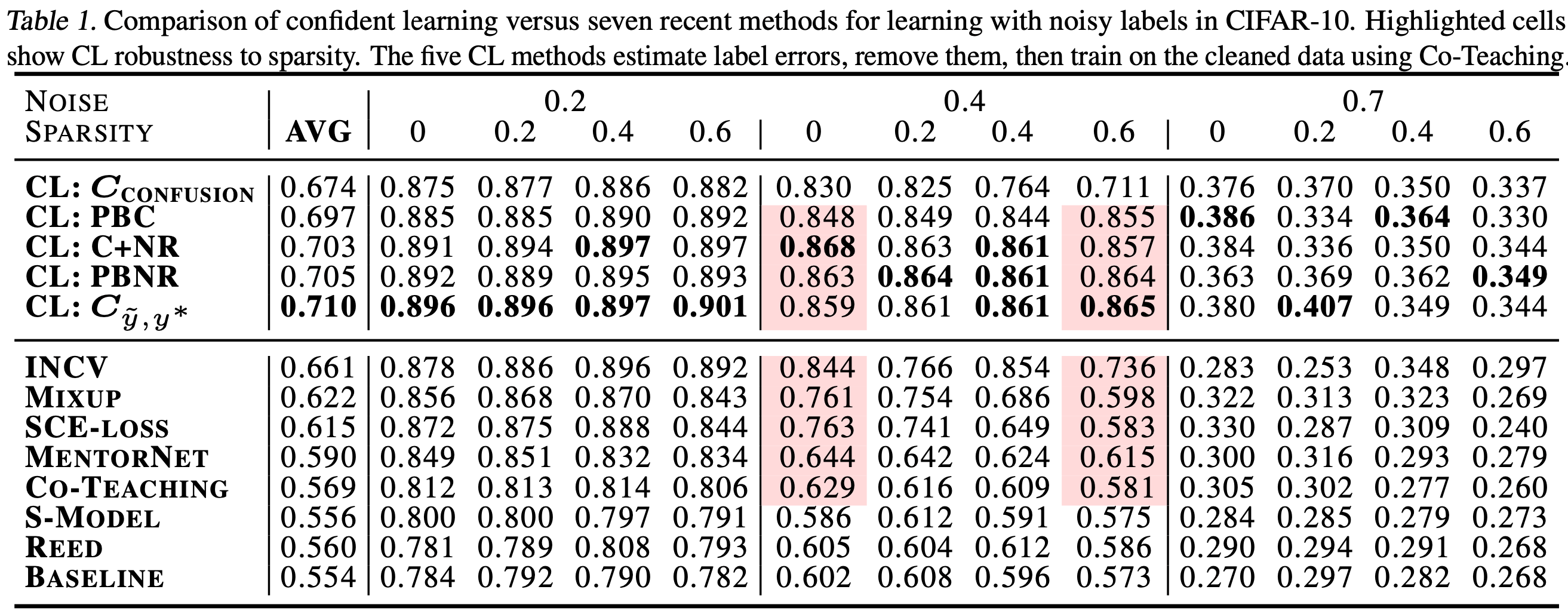

Learning with noisy labels | Papers With Code. Confident Learning: Estimating Uncertainty in Dataset Labels. cleanlab/cleanlab, 31 Oct 2019. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to ...

transferlearning/awesome_paper.md at master · jindongwang (Sep 13, 2022). IEEE-TMM'22 Uncertainty Modeling for Robust Domain Adaptation Under Noisy Environments: uncertainty modeling for domain adaptation in noisy environments. MM-22 Making the Best of Both Worlds: A Domain-Oriented Transformer for Unsupervised Domain Adaptation: using a transformer for domain adaptation.

[R] Announcing Confident Learning: Finding and Learning with Label ... Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.

Confident Learning: Estimating Uncertainty in Dataset Labels. ... the CIFAR dataset. The results presented are reproducible with the implementation of CL algorithms, open-sourced as the cleanlab Python package. These contributions are presented beginning with the formal problem specification and notation (Section 2), then defining the algorithmic methods employed for CL (Section 3)

The NLP Index - Quantum Stat. Given this finding, we develop a framework for dataset development that provides a nuanced approach to detecting machine generated text by having labels for the type of technology used such as for translation or paraphrase resulting in the construction of SynSciPass. By training the same model that performed well on DAGPap22 on SynSciPass, we show that not only is the …

Does Confident Learning learn from incorrect supervision? From noise generation to evaluation on a tf-idf dataset - 学習する天然 ... A paper titled Confident Learning: Estimating Uncertainty in Dataset Labels was submitted to ICML2020. What is more, it came with a well-maintained implementation, cleanlab. This time, using a dataset in which the RCV1-v2 documents have been converted to tf-idf features, we experiment to see whether Confident Learning (CL) is effective ...

(PDF) Confident Learning: Estimating Uncertainty in Dataset Labels. Authors: Curtis G. Northcutt (Massachusetts Institute of Technology), Lu Jiang (Google Inc.), Isaac L. Chuang. Abstract: Learning...

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

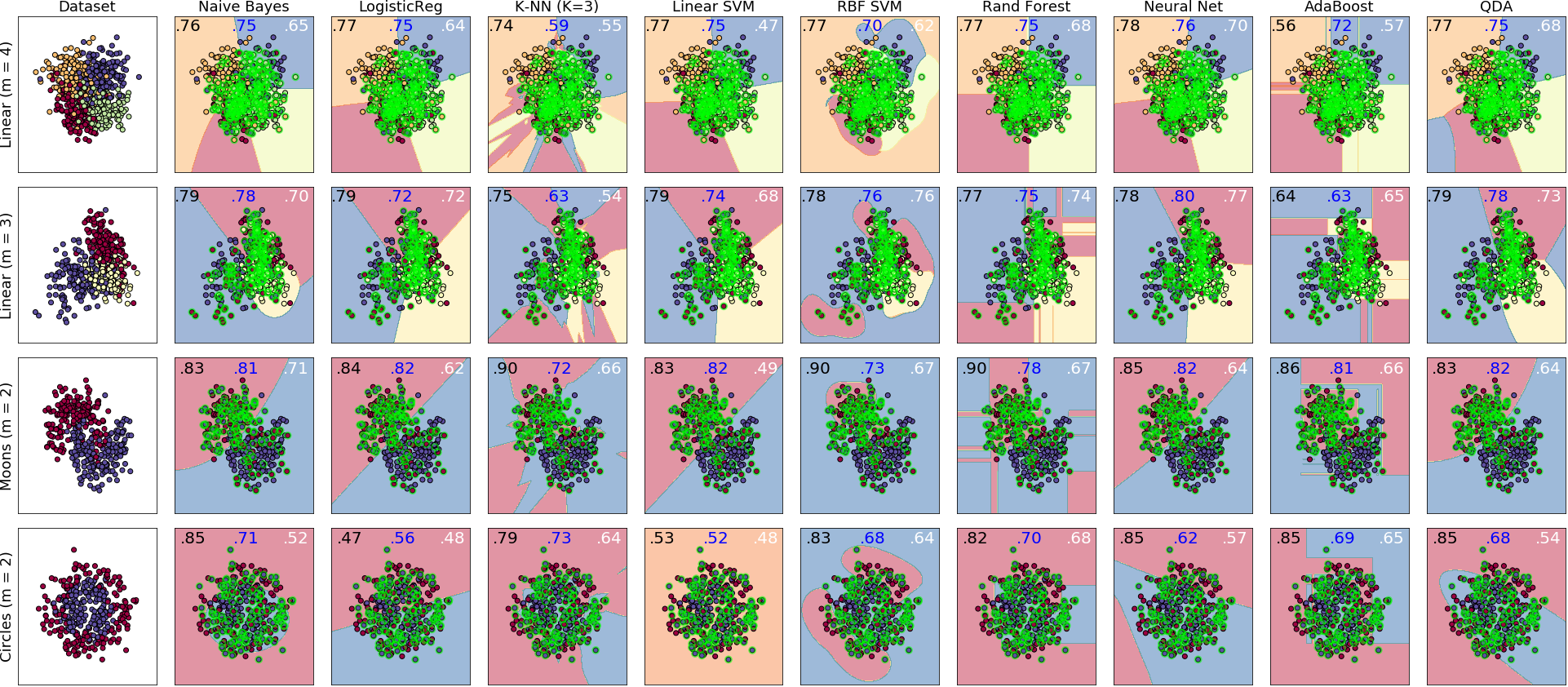

Hands on Machine Learning with Scikit Learn Keras and … An underlying statistical learning algorithm will have its own set of parameters. In the case of a multiple linear or logistic regression these would be the β_i coefficients. In the case of a random forest one such parameter would be the number of underlying decision trees to use in the ensemble. Once applied to a trading model other parameters might be entry …

An Introduction to Confident Learning: Finding and Learning with Label ... This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. If you've ever used datasets like CIFAR, MNIST, ImageNet, or IMDB, you likely assumed the class labels are correct. Surprise: there are likely at least 100,000 label issues in ImageNet.

GitHub - cleanlab/cleanlab: The standard data-centric AI package … Fully characterize label noise and uncertainty in your dataset. s denotes a random variable that represents the observed, ... {Confident Learning: Estimating Uncertainty in Dataset Labels}, author={Curtis G. Northcutt and Lu Jiang and Isaac L. Chuang}, journal={Journal of Artificial Intelligence Research (JAIR)}, volume={70}, pages={1373--1411 ...

KDD '22: Proceedings of the 28th ACM SIGKDD Conference on … To fill the gap, this paper proposes a novel learning framework that explicitly quantifies vector labels' registration uncertainty. We propose a registration-uncertainty-aware loss function and design an iterative uncertainty reduction algorithm by re-estimating the posterior distribution of true vector label locations based on a Gaussian process. Evaluations on real-world datasets in …

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.
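The three principles named above (counting with probabilistic thresholds, pruning, and ranking) can be illustrated with a minimal pure-Python sketch. It assumes out-of-sample predicted probabilities are already available, uses the mean self-confidence of each class as its threshold, flags off-diagonal entries of the resulting count matrix, and ranks flagged examples by self-confidence. The function names and toy data are hypothetical and chosen for illustration; this is not the cleanlab API.

```python
def class_thresholds(labels, pred_probs, num_classes):
    """Per-class threshold: mean predicted probability of class k among examples labeled k."""
    sums = [0.0] * num_classes
    counts = [0] * num_classes
    for y, probs in zip(labels, pred_probs):
        sums[y] += probs[y]
        counts[y] += 1
    # Assumption of this sketch: a class with no labeled examples gets threshold 1.0.
    return [sums[k] / counts[k] if counts[k] else 1.0 for k in range(num_classes)]


def confident_joint(labels, pred_probs, num_classes):
    """Counting: tally examples by (given label, confidently predicted class)."""
    thresholds = class_thresholds(labels, pred_probs, num_classes)
    joint = [[0] * num_classes for _ in range(num_classes)]
    confident_class = []
    for y, probs in zip(labels, pred_probs):
        # Classes whose predicted probability clears that class's threshold.
        candidates = [k for k in range(num_classes) if probs[k] >= thresholds[k]]
        guess = max(candidates, key=lambda k: probs[k]) if candidates else None
        confident_class.append(guess)
        if guess is not None:
            joint[y][guess] += 1
    return joint, confident_class


def find_label_issues(labels, pred_probs, num_classes):
    """Pruning and ranking: flag off-diagonal examples, least self-confident first."""
    _, confident_class = confident_joint(labels, pred_probs, num_classes)
    flagged = [
        i for i, (y, guess) in enumerate(zip(labels, confident_class))
        if guess is not None and guess != y
    ]
    return sorted(flagged, key=lambda i: pred_probs[i][labels[i]])


# Toy data (made up): six examples, two classes; examples 2 and 5 look mislabeled.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
    [0.1, 0.9], [0.3, 0.7], [0.85, 0.15],
]
joint, _ = confident_joint(labels, pred_probs, 2)
issues = find_label_issues(labels, pred_probs, 2)
print(joint)   # [[2, 1], [1, 2]]
print(issues)  # [5, 2]
```

Flagged examples would then be removed (or reviewed) before retraining; how many to prune and by which rule is a design choice this sketch does not settle.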

Book - NIPS. Reliable and Trustworthy Machine Learning for Health Using Dataset Shift Detection: Chunjong Park, Anas Awadalla, Tadayoshi Kohno, ...; Semi-Supervised Learning with Extremely Limited Labels: Sheng Wan, Yibing Zhan, Liu Liu, Baosheng Yu, Shirui Pan, Chen Gong; Collaborative Uncertainty in Multi-Agent Trajectory Forecasting: Bohan Tang, Yiqi Zhong, Ulrich Neumann, …

Confident Learning: Estimating Uncertainty in Dataset Labels (PDF) - ResearchGate. Confident learning estimates the joint distribution between the (noisy) observed labels and the (true) latent labels and can be used to (i) improve training with noisy labels, and (ii) identify...
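As a rough illustration of what estimating that joint distribution can look like, the sketch below takes a matrix of confident counts (rows: observed label, columns: estimated true label), rescales each row to match the number of examples observed with that label, and normalizes the result to sum to 1. The function names and the counts are hypothetical, and the calibration step is an assumption of this sketch rather than a statement of the exact published procedure.

```python
def estimate_joint(confident_counts, label_counts):
    """Turn confident counts into an estimated joint distribution over (observed, true) labels."""
    calibrated = []
    for row, n_observed in zip(confident_counts, label_counts):
        row_total = sum(row)
        # Rescale the row so it sums to the number of examples carrying this observed label.
        scale = n_observed / row_total if row_total else 0.0
        calibrated.append([count * scale for count in row])
    total = sum(sum(row) for row in calibrated)
    return [[value / total for value in row] for row in calibrated]


def estimated_error_rate(joint):
    """Estimated fraction of mislabeled examples: the off-diagonal mass of the joint."""
    return sum(
        value
        for i, row in enumerate(joint)
        for j, value in enumerate(row)
        if i != j
    )


# Made-up counts: 100 examples observed as class 0 and 50 as class 1,
# of which only 75 in total were counted confidently.
confident_counts = [[40, 10], [5, 20]]
label_counts = [100, 50]
joint = estimate_joint(confident_counts, label_counts)
print(round(estimated_error_rate(joint), 3))  # 0.2
```

The off-diagonal entries of the estimated joint indicate which class pairs are confused most often, which is the information used both to clean the data and to reweight training.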

Confident Learning - Speaker Deck. An explanatory deck on Confident Learning, a technique that can be used to improve data quality. Real-world usage examples may be added later. The deck was prepared for an internal MLOps study session at Money Forward. Reference: Pervasive Label Errors in Test Sets Destabilize Machine Learning Benchmarks.

ctrl/confident_learning.py at master · chang-yue/ctrl

Noisy Labels are Treasure: Mean-Teacher-Assisted Confident Learning for ... Specifically, with the adapted confident learning assisted by a third party, i.e., the weight-averaged teacher model, the noisy labels in the additional low-quality dataset can be transformed from 'encumbrance' to 'treasure' via progressive pixel-wise soft-correction, thus providing productive guidance. Extensive experiments using two ...

Chipbrain Research | ChipBrain | Boston. Confident Learning: Estimating Uncertainty in Dataset Labels, by Curtis Northcutt, Lu Jiang, Isaac Chuang. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and ...

A guide to machine learning for biologists - Nature (Sep 13, 2021). In supervised machine learning, the relative proportions of each ground truth label in the dataset should also be considered, with more data required for machine learning to work if some labels ...

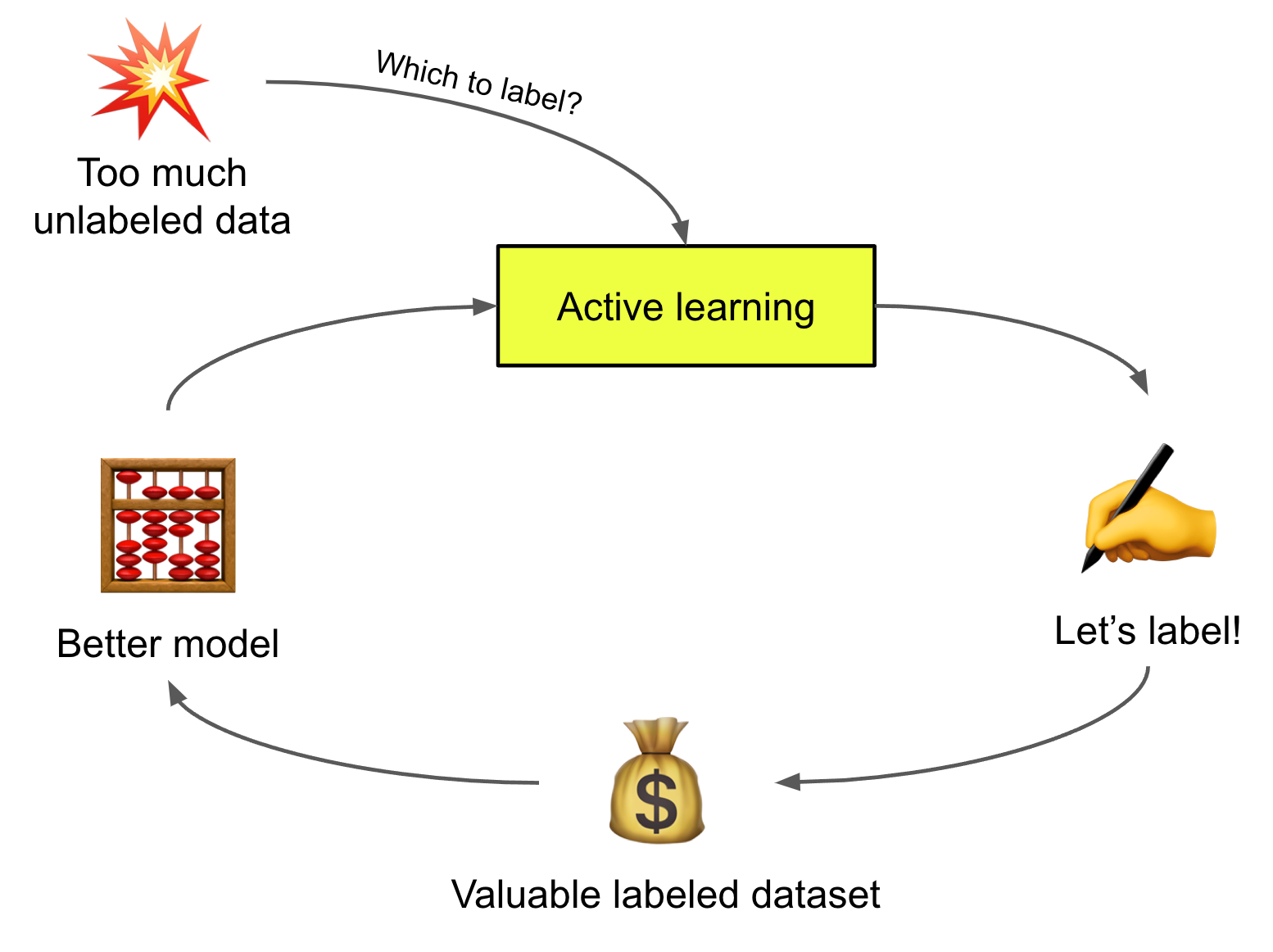

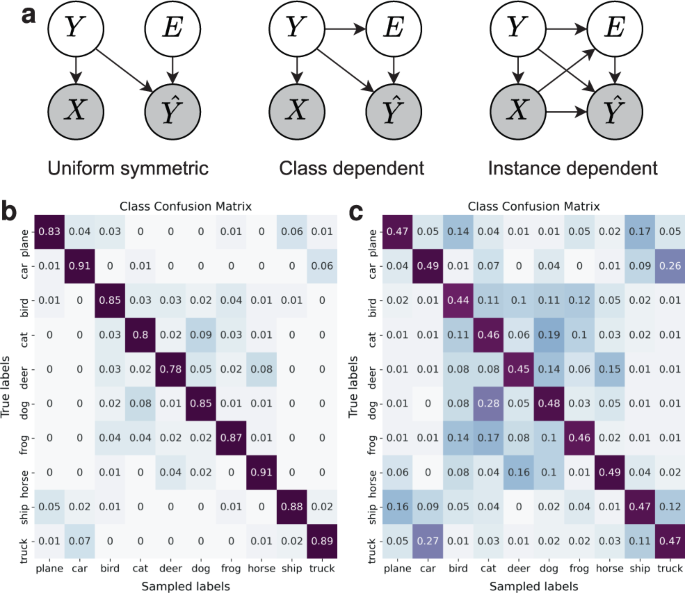

Are Label Errors Imperative? Is Confident Learning Useful? Confident learning (CL) is a class of learning where the focus is to learn well despite some noise in the dataset. This is achieved by accurately and directly characterizing the uncertainty of label noise in the data. CL rests on the assumption that label noise is class-conditional: it depends only on the latent true class, not on the data.
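To make the class-conditional assumption concrete, here is a small sketch that corrupts labels using a noise transition matrix whose row `i` gives the probability of each observed label when the true class is `i`. Nothing about the individual example enters the flip decision, only its true class. The function name, matrix values, and seed are hypothetical and chosen for illustration.

```python
import random


def add_class_conditional_noise(true_labels, transition, seed=0):
    """Sample an observed label for each example from the row of its true class."""
    rng = random.Random(seed)
    classes = list(range(len(transition)))
    # transition[y][k] = P(observed label = k | true class = y); features play no role.
    return [rng.choices(classes, weights=transition[y], k=1)[0] for y in true_labels]


# Hypothetical 3-class setup: classes 0 and 1 are confused with each other,
# while class 2 is never mislabeled.
transition = [
    [0.8, 0.2, 0.0],
    [0.1, 0.9, 0.0],
    [0.0, 0.0, 1.0],
]
true_labels = [0] * 1000 + [1] * 1000 + [2] * 1000
noisy = add_class_conditional_noise(true_labels, transition)

# Empirical flip rate per true class; each should land near the off-diagonal mass of its row.
for c in range(3):
    flipped = sum(1 for t, n in zip(true_labels, noisy) if t == c and n != c)
    print(c, flipped / 1000)
```

Synthetic noise of this kind is a common way to check whether a label-cleaning method recovers known errors before applying it to real data.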

Tag Page | L7. This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. Tags: machine-learning, confident-learning, noisy-labels, deep-learning.

ReaLSAT, a global dataset of reservoir and lake surface area (Jun 21, 2022). Impact of bias in errors and missing data: As mentioned earlier in the methods section, based on our observation, the confidence of water labels is higher than land labels in the GSW dataset. To ...

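Paper walkthrough: Confident Learning: Estimating Uncertainty in Dataset Labels. We consider the problem from a data-centric perspective and arrive at the hypothesis that the key lies in how to precisely and directly characterize the uncertainty of noisy labels in a dataset. The concept of "confident learning" is proposed to address this uncertainty, and two aspects of it stand out. First, label noise depends only on the latent true class. For example, a leopard is often mislabeled as a cougar, not as a bathtub. Second, the joint distribution of the true labels and the noisy labels can be pursued directly, supported by three principles: pruning (of noise), counting, and ranking. Directly estimating the joint distribution of noisy labels and uncorrupted labels is both challenging and valuable, and had not been done before. For learning with noise, removing erroneous labels before training is a very effective technique. For finding, understanding, and learning from noisy data, this paper makes two important contributions.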


![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/28-Figure14-1.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/7-Figure2-1.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/26-Figure11-1.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/29-Figure16-1.png)

![PDF: Confident Learning: Estimating Uncertainty in Dataset ...](https://d3i71xaburhd42.cloudfront.net/6482d8c757bce9b0d208d37d4b09e4805faae983/21-Table5-1.png)
